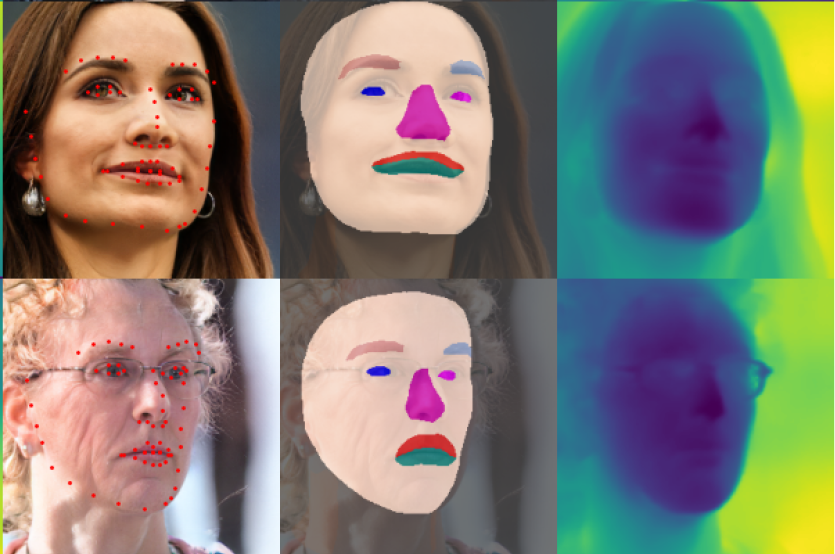

Samples from the generated dataset with the available annotations (keypoints, depth, segmentation), showing a high degree of photorealism.

Abstract

Recent advancements in generative models have unlocked the ability to render photorealistic data in a controllable fashion. Trained on real data, these generative models can produce realistic samples with minimal to no domain gap compared to traditional graphics rendering. However, using data generated by such models to train downstream tasks remains under-explored, mainly due to the lack of 3D-consistent annotations. Moreover, controllable generative models are learned from massive data, and their latent space is often too vast to obtain meaningful sample distributions for downstream tasks with a limited amount of generated data. To overcome these challenges, we extract 3D-consistent annotations from an existing controllable generative model, making the data useful for downstream tasks. Our experiments show competitive performance against state-of-the-art models using only generated synthetic data, demonstrating the potential of this approach for solving downstream tasks.

SynthForge Summary

SynthForge harnesses cutting-edge 3D generative models to create realistic, annotated facial datasets, empowering downstream facial analysis tasks with synthetic data that rivals real-world data in performance.

Key Features:

- Advanced Generative Technology: Utilizes controllable 3D generative models for high-fidelity data synthesis.

- 3D Consistent Annotations: Provides detailed, consistent 3D annotations crucial for accurate facial analysis.

- State-of-the-Art Performance: Achieves competitive results against leading models using solely synthetic data.

- Domain Gap Minimization: Offers realistic samples with minimal domain gaps, enhancing model reliability and applicability.

- Resource Efficiency: Reduces the need for extensive real-world data collection and manual annotation.

Overall Approach

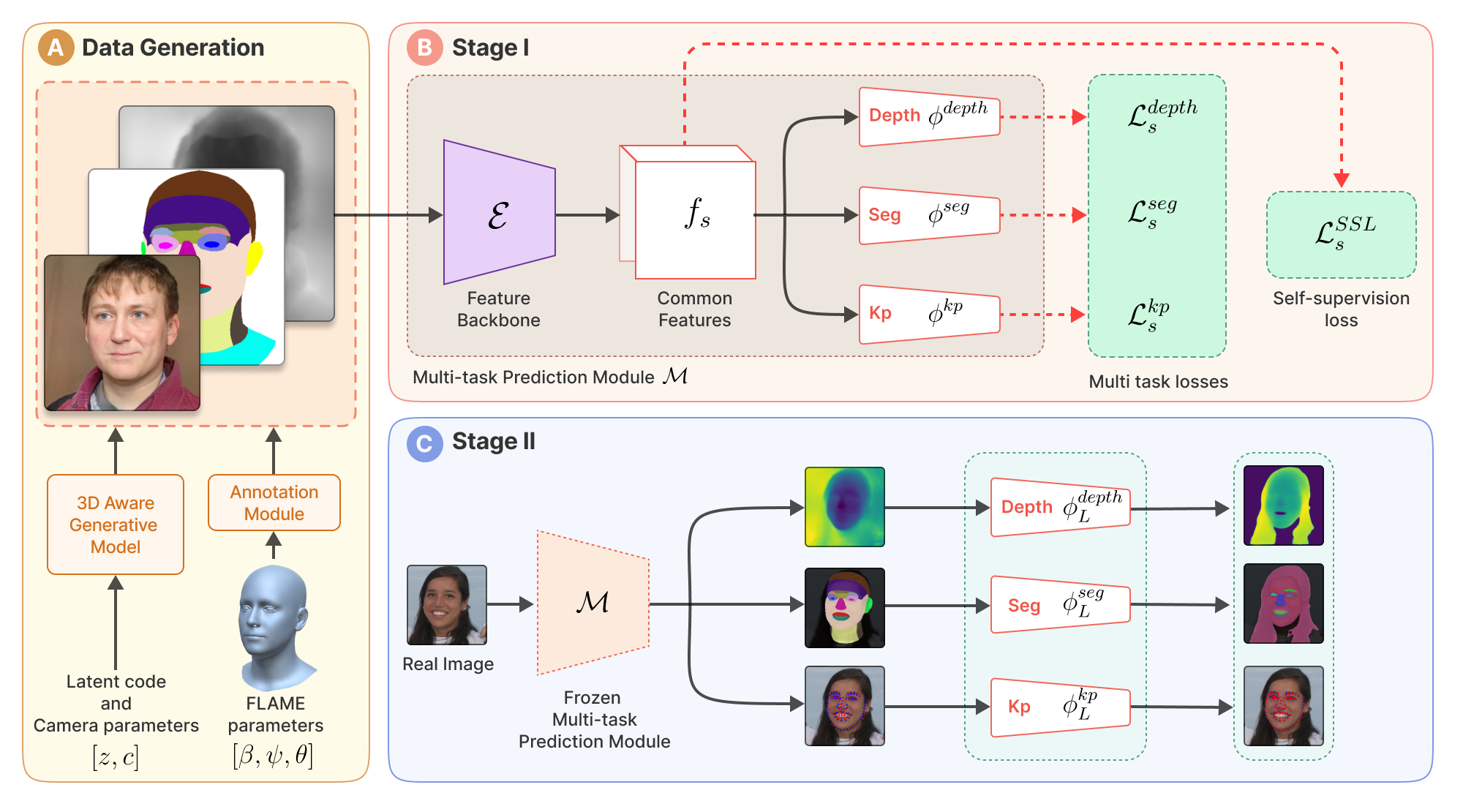

The approach shown in the diagram is a multi-stage process for generating and using synthetic facial data. The first component (A) generates photorealistic facial images with a 3D-aware generative model and annotates them with depth, segmentation, and keypoints. This data feeds into (B), a multi-task prediction module that processes common features and computes losses for depth, segmentation, and keypoints, together with a self-supervision loss. Stage II (C) applies the pre-trained multi-task prediction module to real images, using the learned tasks to predict detailed facial attributes so that the model remains applicable to real-world scenarios. Together, these stages form a pipeline from training on synthetic data to application on real images.
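The loss computation in (B) can be pictured as a weighted sum of per-task terms. The following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: the function names, the choice of L1 and cross-entropy losses, the weight values, and the treatment of the self-supervision loss as a precomputed scalar are all hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


def l1_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error; a stand-in for depth and keypoint regression losses."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


def cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-likelihood; probs[i] is the class distribution at pixel i."""
    eps = 1e-12  # avoids log(0)
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


@dataclass
class LossWeights:
    # Hypothetical weights; the source does not state how the terms are balanced.
    depth: float = 1.0
    segmentation: float = 1.0
    keypoints: float = 1.0
    self_supervision: float = 0.1


def multi_task_loss(pred: dict, target: dict, self_sup: float,
                    weights: Optional[LossWeights] = None) -> Dict[str, float]:
    """Combine per-task losses into one scalar via a weighted sum."""
    w = weights or LossWeights()
    terms = {
        "depth": l1_loss(pred["depth"], target["depth"]),
        "segmentation": cross_entropy(pred["segmentation"], target["segmentation"]),
        "keypoints": l1_loss(pred["keypoints"], target["keypoints"]),
        # Assumption: the self-supervision term is computed elsewhere and passed in.
        "self_supervision": self_sup,
    }
    terms["total"] = (w.depth * terms["depth"]
                      + w.segmentation * terms["segmentation"]
                      + w.keypoints * terms["keypoints"]
                      + w.self_supervision * terms["self_supervision"])
    return terms


# Toy example: two depth values, one pixel with two classes, one (x, y) keypoint.
pred = {"depth": [1.0, 2.0], "segmentation": [[0.5, 0.5]], "keypoints": [0.2, 0.4]}
target = {"depth": [1.5, 2.5], "segmentation": [0], "keypoints": [0.2, 0.6]}
losses = multi_task_loss(pred, target, self_sup=2.0)
```

Returning the individual terms alongside the total makes it easy to log each task separately during training, which helps when one task dominates the shared features.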


Sample images from the generated dataset

The samples highlight depth, semantic segmentation, and facial keypoints.

The dense annotations available are useful for multi-task training.

BibTeX

@misc{rawat2024synthforge,

title={SynthForge: Synthesizing High-Quality Face Dataset with Controllable 3D Generative Models},

author={Abhay Rawat and Shubham Dokania and Astitva Srivastava and Shuaib Ahmed and Haiwen Feng and Rahul Tallamraju},

year={2024},

eprint={2406.07840},

archivePrefix={arXiv},

primaryClass={cs.CV}

}